Many attempts have been made to convert dance into video, or to generate video from information captured from dance, using a variety of methods. Early approaches drew on mathematics, architecture, and engineering; today, machine learning and AI are actively used to transform and generate choreography.

Recently, a new approach to video generation, the diffusion model, has emerged and is changing how dance motion and movement are converted into video.

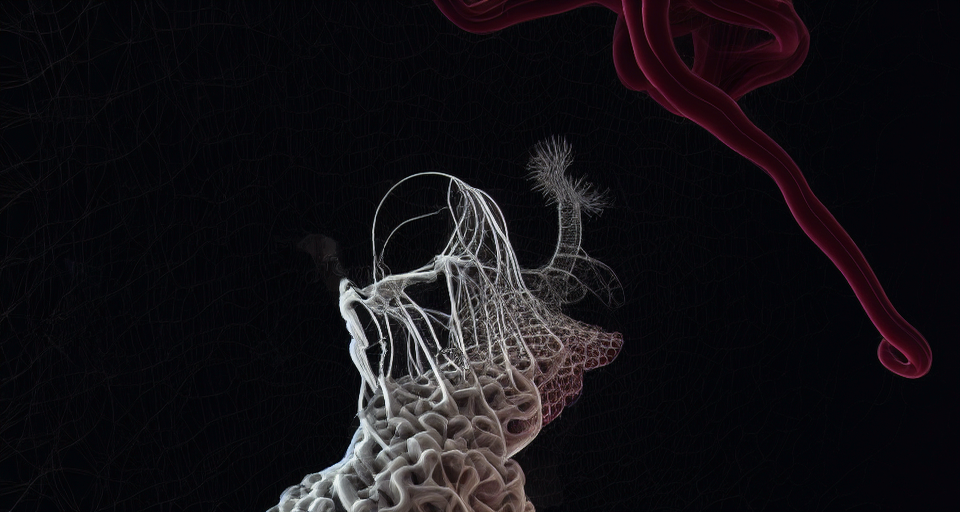

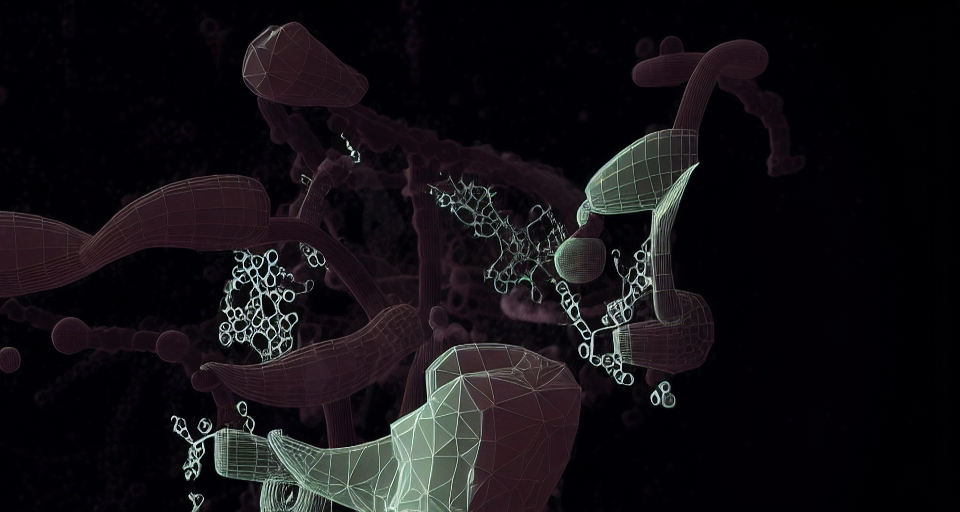

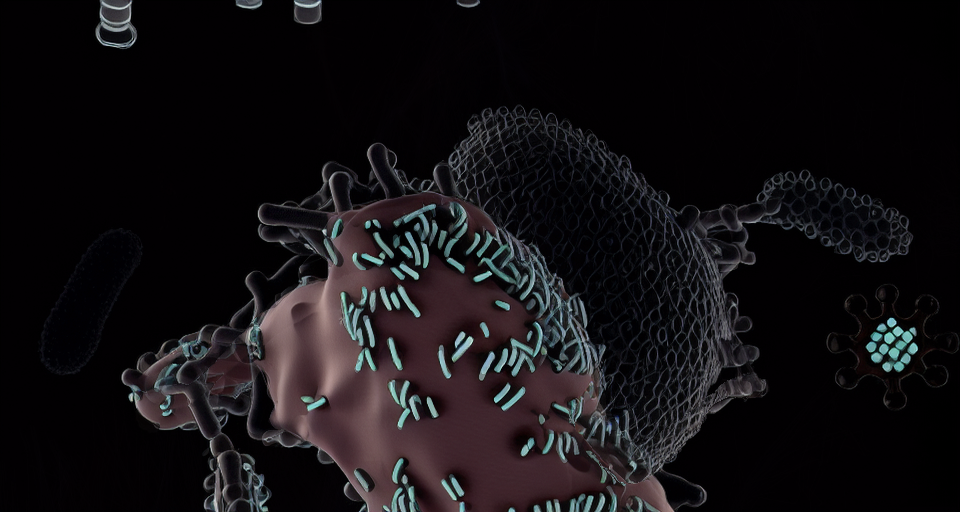

This work explores the possibilities of new video expression by converting body data into video, integrating the latest algorithms with other techniques such as fluid simulation, image analysis, and artificial simulation.

Credits

Direction, music, and visual programming: Daito Manabe

Server-side programming: 2bit

Dancer: Shingo Okamoto and Sara (ELEVENPLAY)